Seven Months

That’s how long it took our machine learning (ML) and artificial intelligence (AI) upscaling computer to process all of The Pandora Directive source tapes. Seven long months of non-stop rendering. Running some of the latest AI upscaling models, the AI box (or TexBox, as we have dubbed it), which doubled as an effective space heater during this time, processed the digitized tapes around the clock, frame by frame, converting interlaced SD video into progressive 4K at 60fps.

This morning, the last frame of the last video finished rendering, bringing the total to just under 8TB of data, and the results so far speak for themselves…

“Pop the champagne and celebrate!” you may be saying.

Well, not quite. A LOT can change over seven months, particularly in the world of technology. The AI models that yielded excellent results are constantly being improved. But that’s not the whole story. Let’s go back to the beginning first…

Beta maxxed

You may recall a previous article where we had issues capturing the “B Camera” tapes and had to acquire all-new equipment to successfully dub the MII-format tapes. Those digitizations turned out so spectacularly that we went back to the original “A Camera” (Beta SP) captures, which had been dubbed a couple of years earlier, and compared the two. As it turns out, the “A Camera” captures (arguably the more important of the two sets) looked a bit “soft” by comparison. So, we dug the Beta SPs out of storage again and took them back to the production house for a second run, this time using some all-new digital equipment they had recently acquired…

The results of our second attempt at capturing the Beta SP “A Camera” tapes were a vast improvement. It was virtually night and day…

Having weighed the cost of capturing the “A Camera” tapes a second time (both financially and in terms of time) against the difference between the two outputs, we were extremely happy we had decided to perform a second capture pass on the new gear.

It was at this stage that we got to work on upscaling all the tapes in one go. Our TexBox churned through almost 74 hours of video over the course of seven months, and while it did a fantastic job of upscaling the content to 4K, a few issues stood out once the machine had completed its task.
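For a sense of scale, here is a rough back-of-envelope calculation of what “almost 74 hours of video over the course of seven months” works out to. It is only a sketch under stated assumptions: it treats the output as exactly 60 frames per second, assumes seven months of uninterrupted processing (about 213 days), and takes the “just under 8TB” total as a flat 8TB, so every figure is an approximation rather than a measured statistic from the TexBox.

```python
# Back-of-envelope throughput estimate for the first (Model A) upscaling pass.
# Assumptions (not measured figures): output is exactly 60 frames per second,
# and "seven months" means ~7 * 30.44 days of continuous, uninterrupted rendering.

VIDEO_HOURS = 74          # "almost 74 hours of video" (treated as an upper bound)
OUTPUT_FPS = 60           # progressive 4K at 60fps
RENDER_DAYS = 7 * 30.44   # ~seven months, assumed continuous
TOTAL_TB = 8              # "just under 8TB" (treated as an upper bound)

# Total number of upscaled output frames.
frames = VIDEO_HOURS * 3600 * OUTPUT_FPS

# Total wall-clock rendering time in seconds.
render_seconds = RENDER_DAYS * 24 * 3600

frames_per_second = frames / render_seconds
seconds_per_frame = render_seconds / frames
compute_hours_per_video_hour = (render_seconds / 3600) / VIDEO_HOURS

# Average stored size per frame, using decimal units (1 TB = 1,000,000 MB).
mb_per_frame = TOTAL_TB * 1_000_000 / frames

print(f"Output frames:               {frames:,}")
print(f"Throughput:                  {frames_per_second:.2f} frames/s "
      f"({seconds_per_frame:.2f} s/frame)")
print(f"Render hours per video hour: {compute_hours_per_video_hour:.0f}")
print(f"Average size per frame:      {mb_per_frame:.2f} MB")
```

Under these assumptions, that comes to roughly 16 million output frames rendered at a little under one frame per second, or about 69 hours of rendering for every hour of footage. If Model B renders at a comparable speed (an assumption, since upscaling models can differ widely in speed), the second pass would take a similar amount of time.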

There’s no such thing as a one-size-fits-all solution

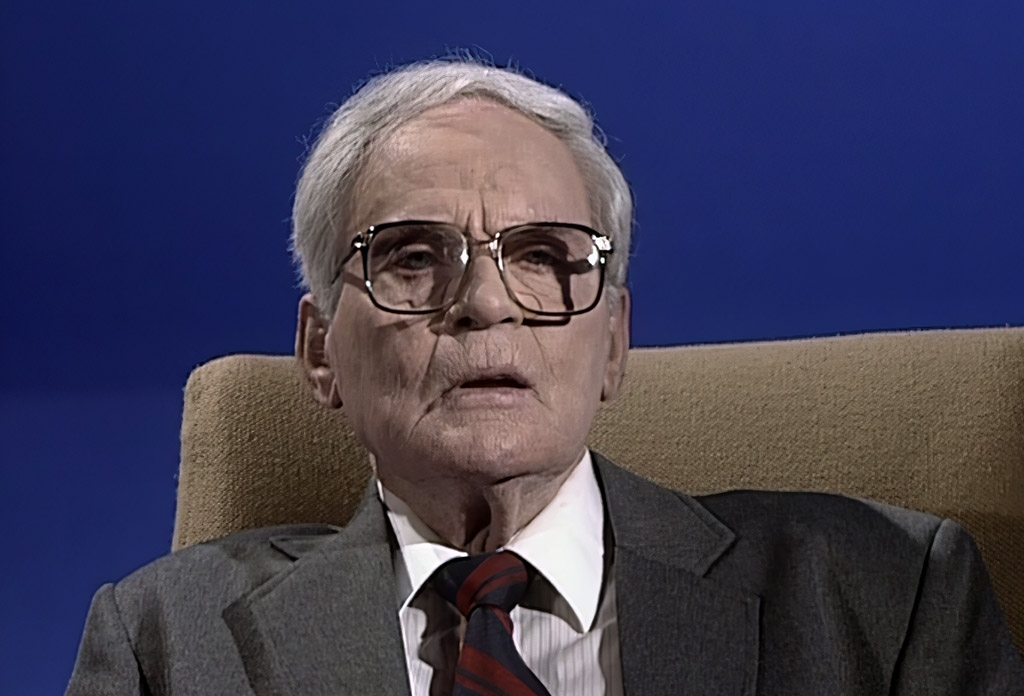

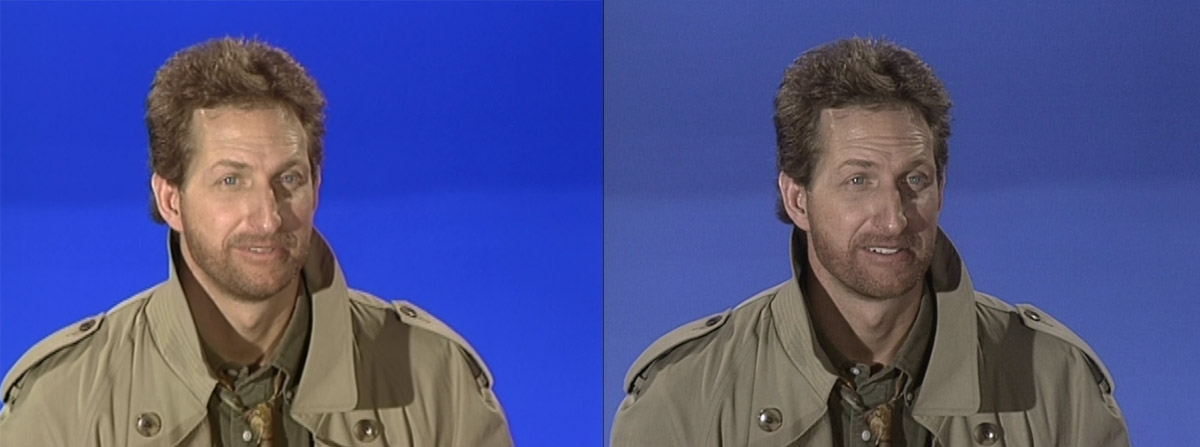

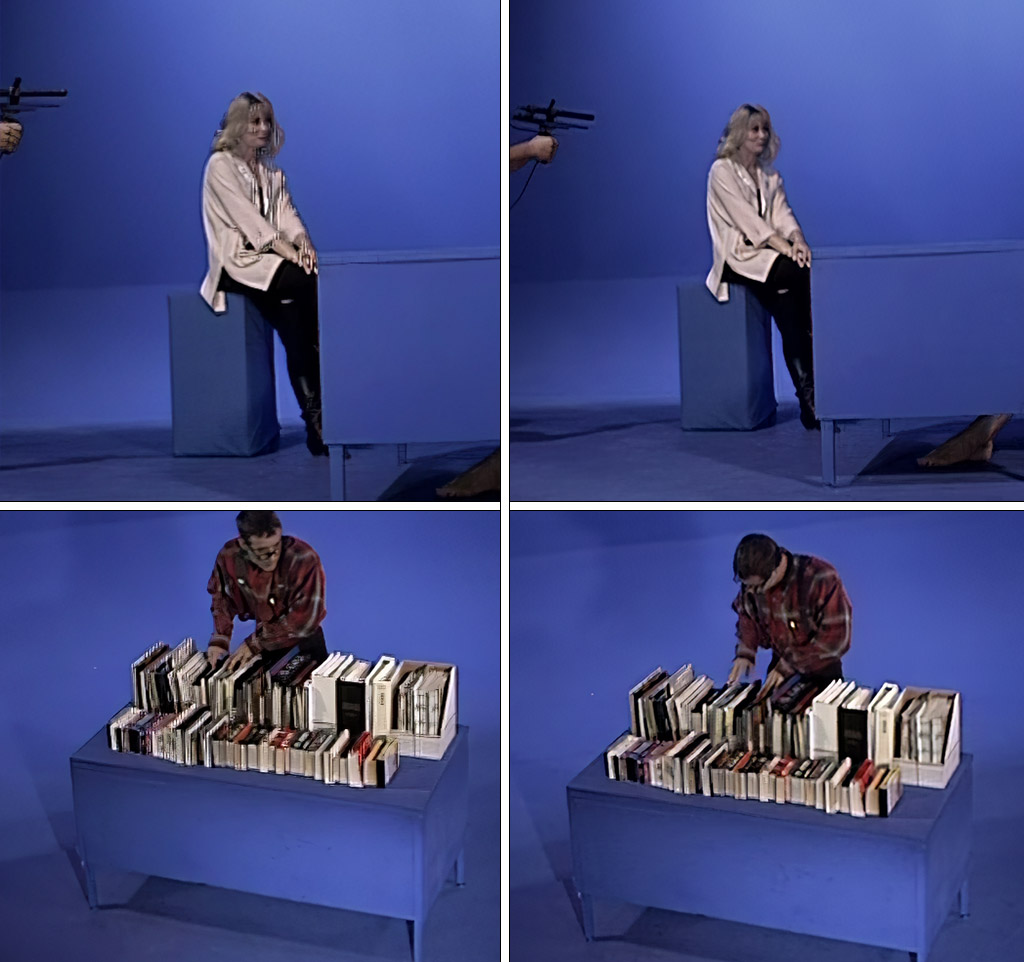

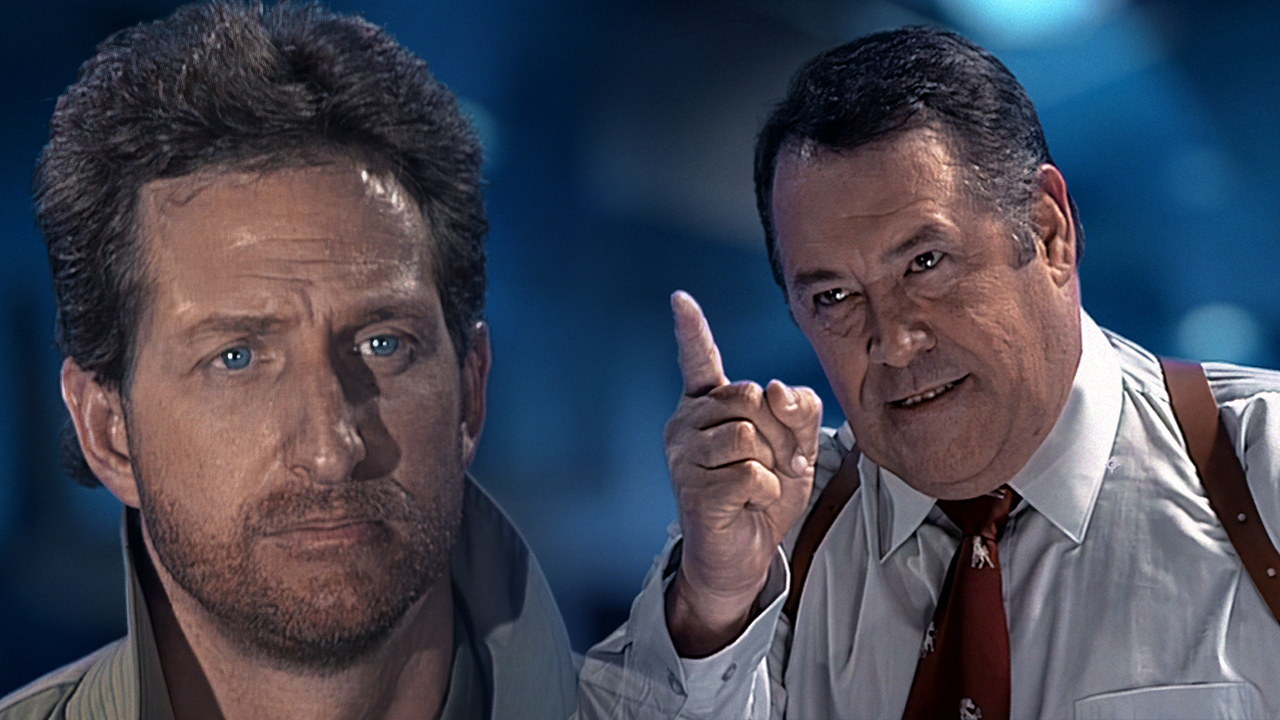

AI upscaling models may be fantastic in general, but rarely does one model excel at every type of content. In our case, one model, which yielded the crispest 4K output (see the individual actor images above), did extremely well on shots where the actors were at close or medium distance from the camera, but broke down on subjects beyond a certain distance. Another model, by contrast, rendered closer subjects less crisply but produced much cleaner results on distant subjects.

So, how do we switch back and forth between the two models to get the best of both worlds? Put simply: we don’t. We render everything twice! Once with Model A and once with Model B.

This is why, even though the machine has just completed seven months’ worth of AI rendering, we are not celebrating just yet. This first render was *just* for Model A. Now we commence the process all over again for Model B. This way, we can choose between the two renders during the edit, using whichever works best for a given shot.

It’s a slow process, but we’re making progress

Despite the sheer amount of time it takes to process the video, we are confident this approach will yield the best results in the long run. After all, there’s no sense in taking a half-baked approach to any of this. We’re also not spending all this rendering time just sitting around waiting for the computer to finish.

Doug Vandegrift has spent every day of those seven months re-creating every location from The Pandora Directive, and we will showcase some of his progress on those designs in an upcoming article/update. Adrian Carr has also already begun cutting together his new edits using the first-pass renders. The rest of the team is working on virtually every other part of the process while our hard-working TexBox churns away in the background, racking up a hefty power bill in the process.

In the meantime, thank you for your patience and for allowing us the opportunity to take extra care in doing everything to the best of our (and the technology’s) ability.