Is anybody up for round three?

Well, it turns out the technology is continuously improving, and so is our expertise. As you may recall from one of our previous articles, we had elected to perform two passes of AI/machine learning upscaling on the Pandora Directive tapes. Both models yielded excellent results, and both were designed specifically for handling interlaced footage, which is the format our source tapes are recorded in.

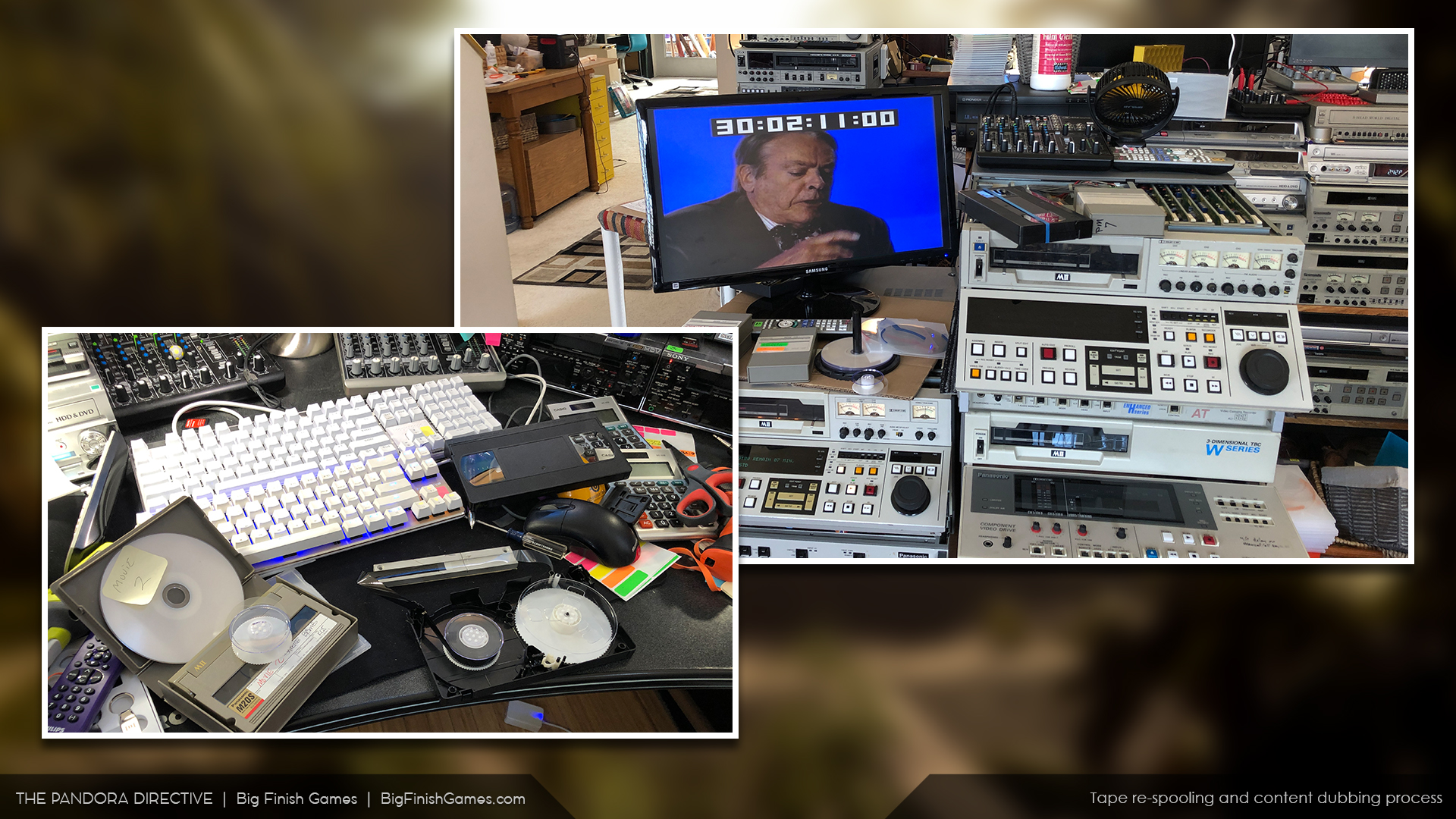

However, interlacing is one of those horrible beasts, a relic of the bygone era of limited-bandwidth broadcasting and lackluster management of industry standards. And that’s not to say anything about the multiple tape formats used on the Pandora Directive shoot, each with its own specific format parameters! Some of them needed to be re-spooled before we could even view or capture them!

For all its strengths, though, AI/machine learning must not be considered a one-size-fits-all solution because the source material can be highly diverse. Even within the same format (for example, BETA SP), the image can vary from tape to tape and shot to shot, and high motion is a particularly troublesome beast. While both AI models did a great job, they could not handle all of the higher-motion content very well, resulting in combing issues.

So, after investigating the issue further (and with the help of a couple of community members who happily volunteered some of their own insight and experiences), we explored a whole new method of deinterlacing the source videos.

C:\Pandora\Tex4.exe -v -a \v \i TFF D:\SourceTapes\Tape001.ergh

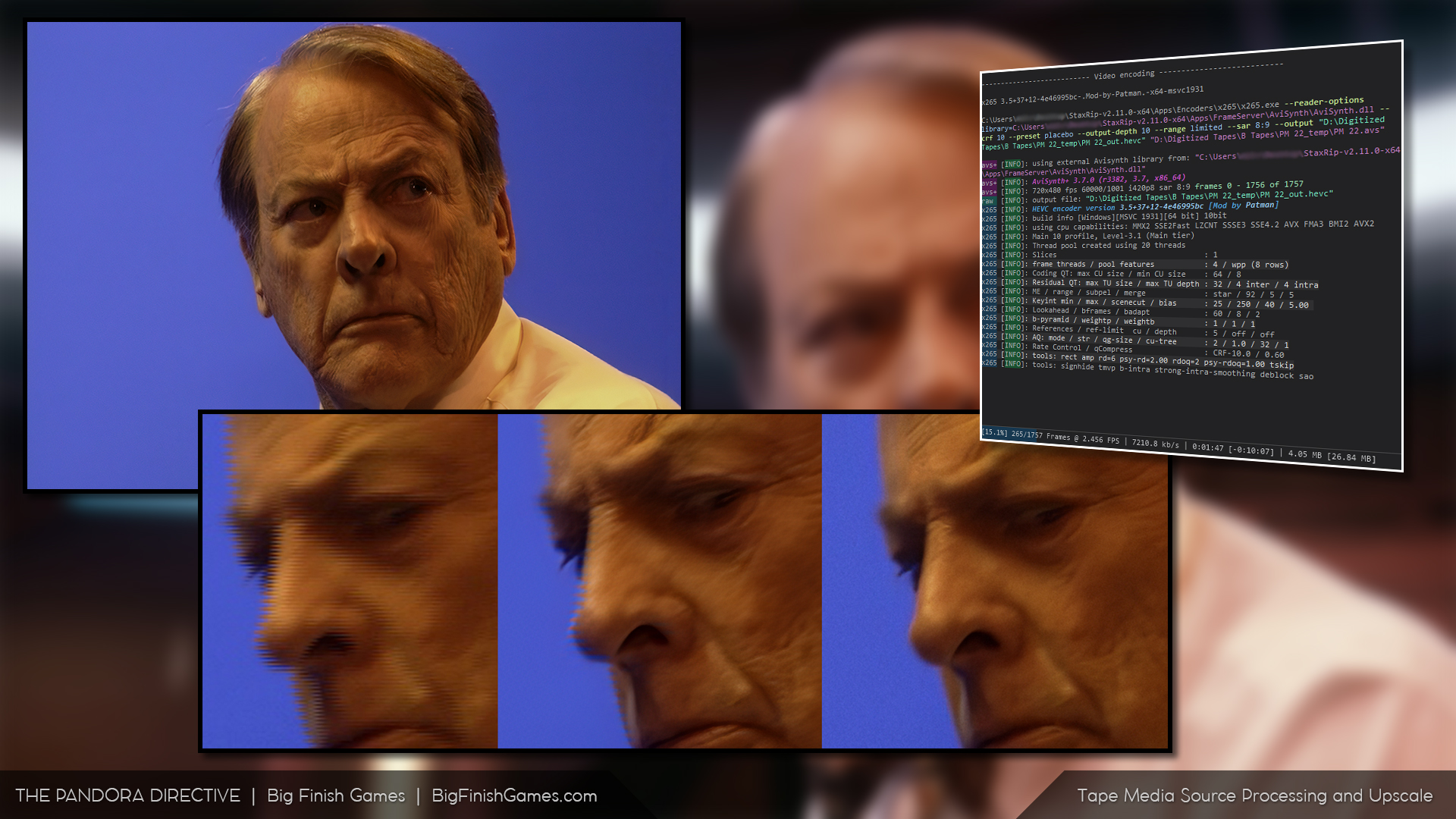

Returning to the command prompt led us down the path of using AviSynth to perform much more advanced deinterlacing of the source footage. We began testing the output to see whether we could solve our combing issues while preserving the 60 full frames per second we were getting when the deinterlacing process was integrated into the AI upscaling procedure.
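For readers wondering where those 60 frames come from: a 480i frame holds two fields captured at different moments, so roughly 30 interlaced frames per second contain roughly 60 distinct moments in time. The sketch below shows the simplest possible version of that idea, a "bob" deinterlace with line doubling, in plain Python. It is only an illustration and not the AviSynth procedure we settled on. The function names are made up for this example, and real deinterlacers interpolate and compensate for motion rather than just repeating rows.

```python
from typing import List, Tuple

Frame = List[List[int]]  # a frame as rows of pixel values (hypothetical toy format)


def split_fields(frame: Frame, top_field_first: bool = True) -> Tuple[Frame, Frame]:
    """Return the two fields of an interlaced frame in temporal order."""
    top = frame[0::2]     # even rows: top field
    bottom = frame[1::2]  # odd rows: bottom field
    return (top, bottom) if top_field_first else (bottom, top)


def line_double(field: Frame) -> Frame:
    """Stretch a half-height field to full height by repeating each row."""
    doubled: Frame = []
    for row in field:
        doubled.append(list(row))
        doubled.append(list(row))
    return doubled


def bob_deinterlace(frames: List[Frame], top_field_first: bool = True) -> List[Frame]:
    """Turn each interlaced frame into two progressive frames (one per field)."""
    progressive: List[Frame] = []
    for frame in frames:
        for field in split_fields(frame, top_field_first):
            progressive.append(line_double(field))
    return progressive


# Toy 4-row interlaced frame: 1 marks rows captured first, 2 marks rows
# captured one field period later. Mixing them in one frame is what shows
# up as combing on high-motion shots.
interlaced = [
    [1, 1],
    [2, 2],
    [1, 1],
    [2, 2],
]

progressive = bob_deinterlace([interlaced])
print(len(progressive))  # 2 output frames for 1 input frame
```

Each output frame now contains rows from a single moment only, which is why the frame rate doubles and the combing disappears, at the cost of vertical detail that a more advanced deinterlacer tries to recover.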

After playing with a few models and techniques, we settled on one particular procedure that appeared to solve our issues entirely…

Model C has entered the chat

With a pipeline in place for effectively deinterlacing the footage and recovering 60 full frames per second from the 480i source, we were able to explore a whole new range of AI/machine learning models that were unavailable to us when we were directly interpreting interlaced footage.

This allowed us to get down to the nitty-gritty, tweaking more settings and parameters, and eventually gave us output superior to that of Models A and B. Welcome to Model C…

We’re gonna need a bigger boat

I know what you’re thinking, and the answer is: yes, we do need to render the upscales again, for the third time. And yes, we needed to add even more hard drive space to The Pandora Device. We just added another 8TB, so the machine now has 28TB of storage in total!

We can’t estimate (yet) how long it will take to A) run the more advanced deinterlacing process on all the tapes and B) render the deinterlaced tapes using Model C. But the good news is: there is nothing stopping us from taking Model A and Model B and using them to cut together the video and commence compositing while the machine is churning away. Adding Model C back into the mix (once it is done) should be a simple matter of switching out the proxies with the newly processed videos. We hope.

We’ve come a long way

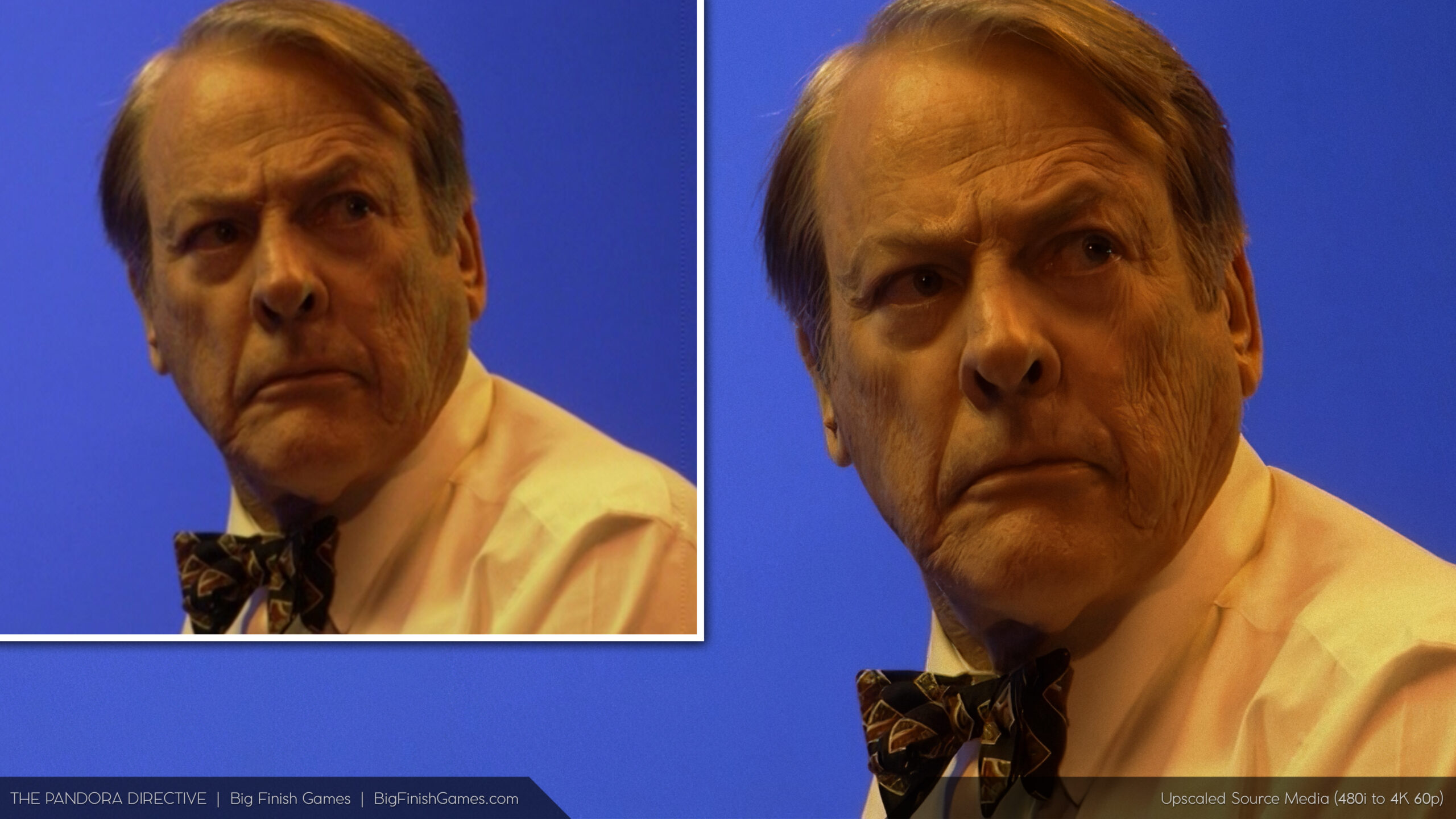

Considering we started from tiny letterboxed video on a 4:3 monitor, presented at 10 frames per second and in 256 colors, it’s still an absolute miracle we can even begin to explore presenting The Pandora Directive’s legendary full-motion video in up to 4K, completely remastered. On top of that, getting right down to the fine details and ensuring it looks like it was shot yesterday is some fantastic icing on the fedora!

We consider this project to be a benchmark of what is possible for old content remasters, which is why we are taking our time and staying very focused on the task at hand. We’ve enjoyed sharing the process with you and look forward to continuing the journey with you.

In the meantime, here’s another short clip showcasing the comparison between The Pandora Directive’s 1996 full-motion video and the remaster.

In this example, we wanted to really challenge the technology…

In the first shot with Fitzpatrick and Witt:

There were numerous challenges. There are two subjects, each at a different distance from the camera (with Witt predominantly in focus and Fitzpatrick slightly soft). There are also Witt’s glasses, which are ordinarily very difficult to manage on chromakey due to color bleed, shifting, and refractions. Lastly, there’s the high motion of Witt’s arms as he speaks.

We were able to overcome all of these issues quite effectively. You may have also noticed we used an alternative angle for this example. One of the many benefits of going back to the source tapes is the ability to pick alternative angles and takes.

In the second shot with Tex:

We were dealing with more dramatic lighting cues, resulting in soft focus and potential color bleed on his hair.

This was also not a problem. As you can see, we were able to effectively key around his hair while also preserving the structure and details of individual strands. We also used the increased resolution to perform a slow zoom on the subject, which is another huge advantage, enabling us to utilize more advanced camera and editing techniques to enhance the atmosphere and style.